Max Zimmer

PhD candidate in Mathematics at TU Berlin

Advisor: Prof. Dr. Sebastian Pokutta

Research

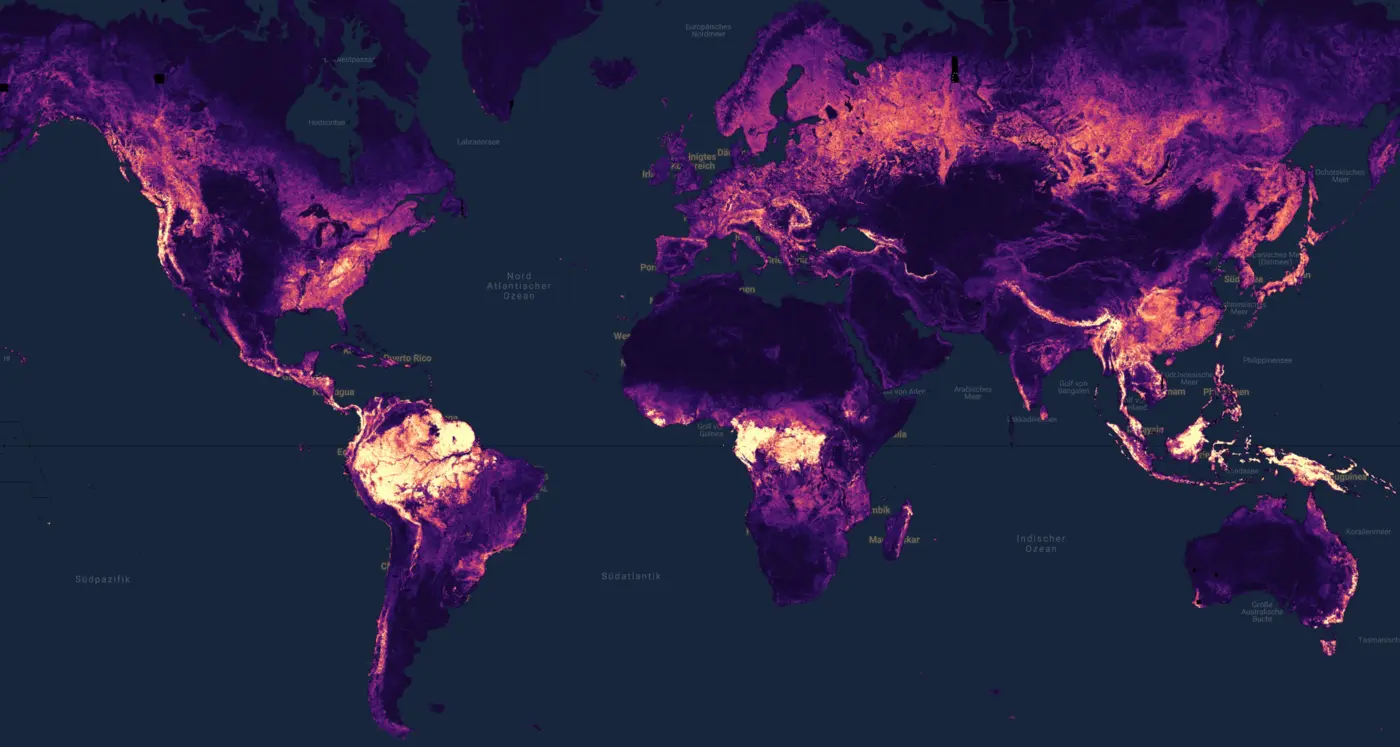

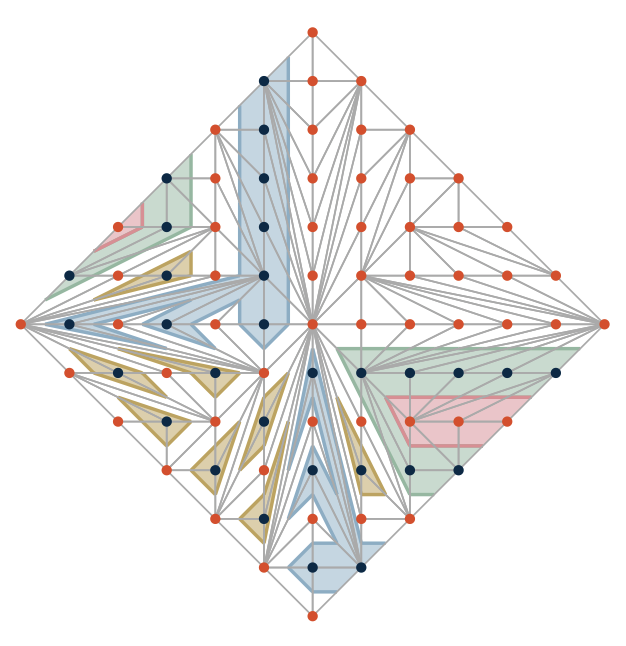

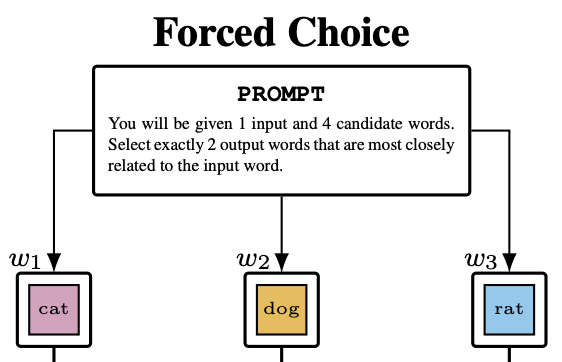

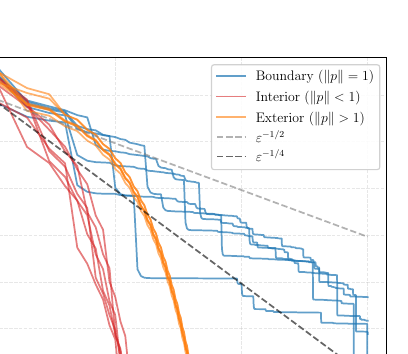

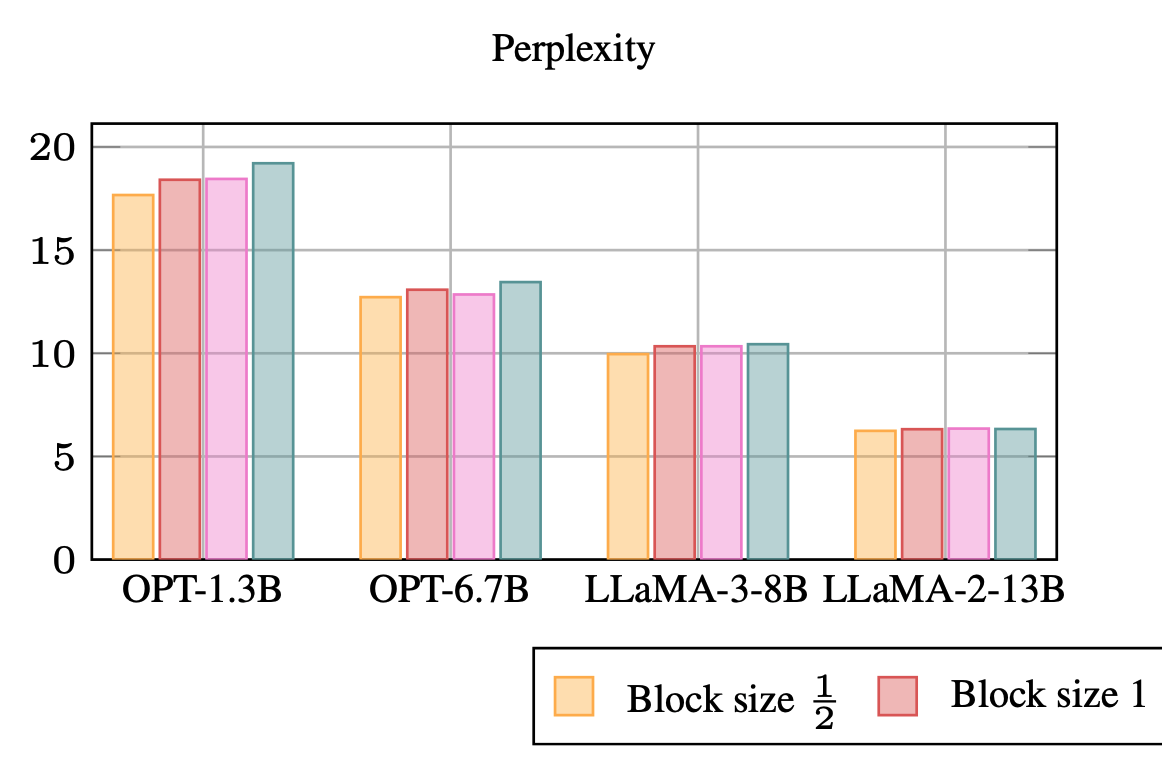

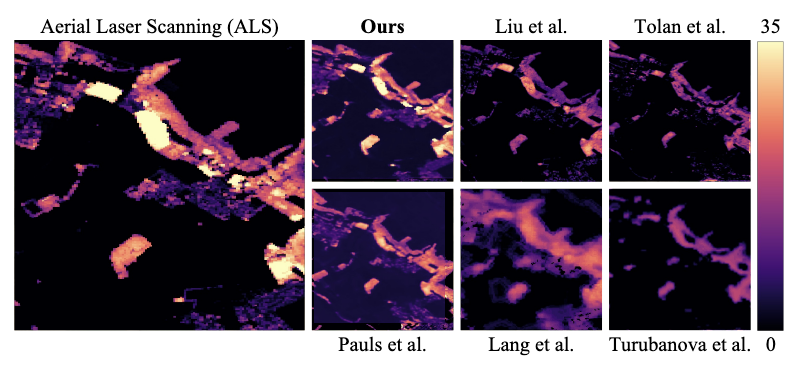

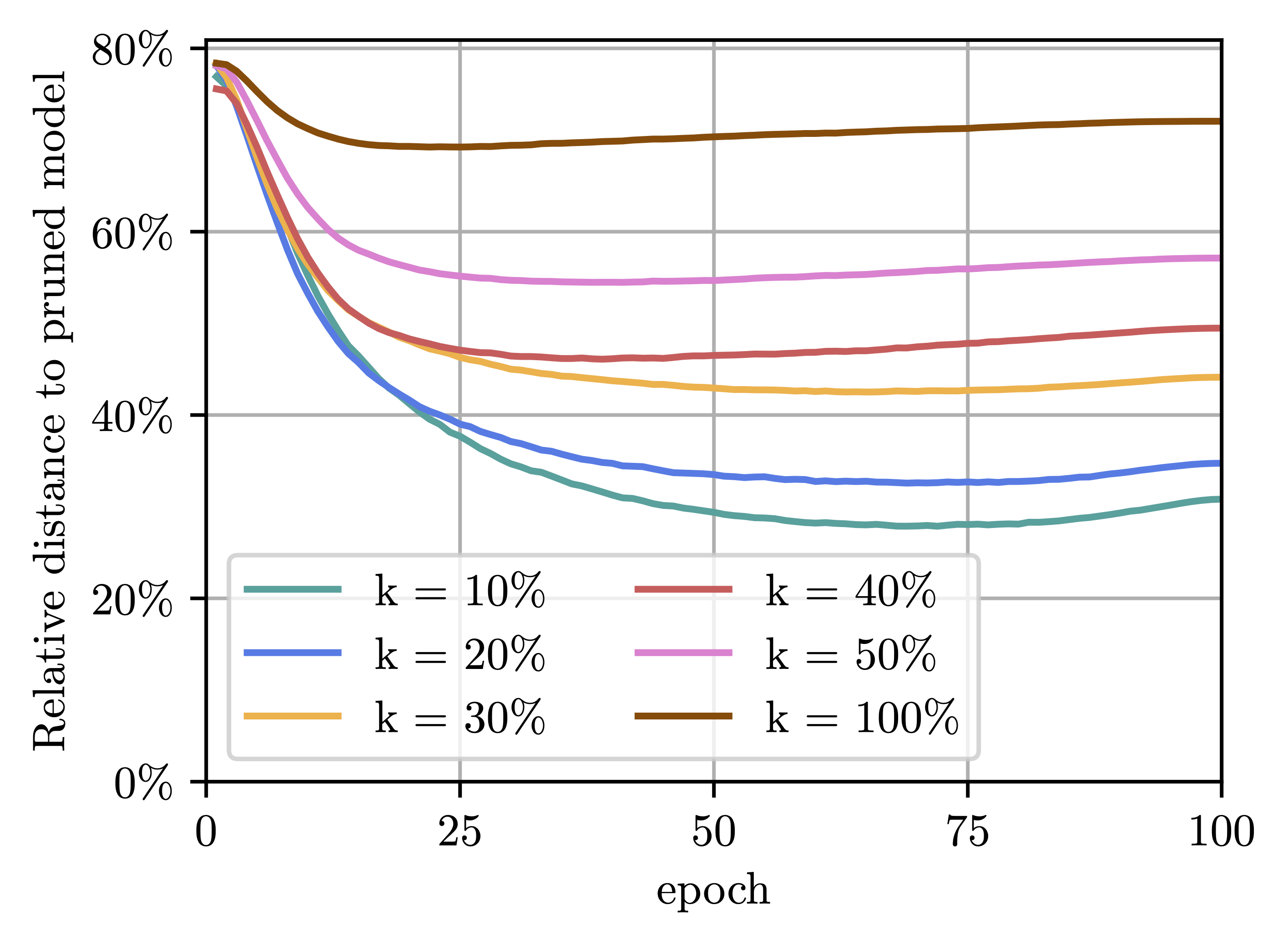

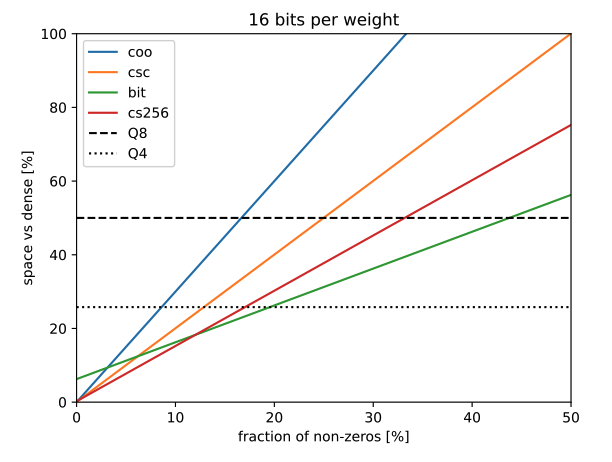

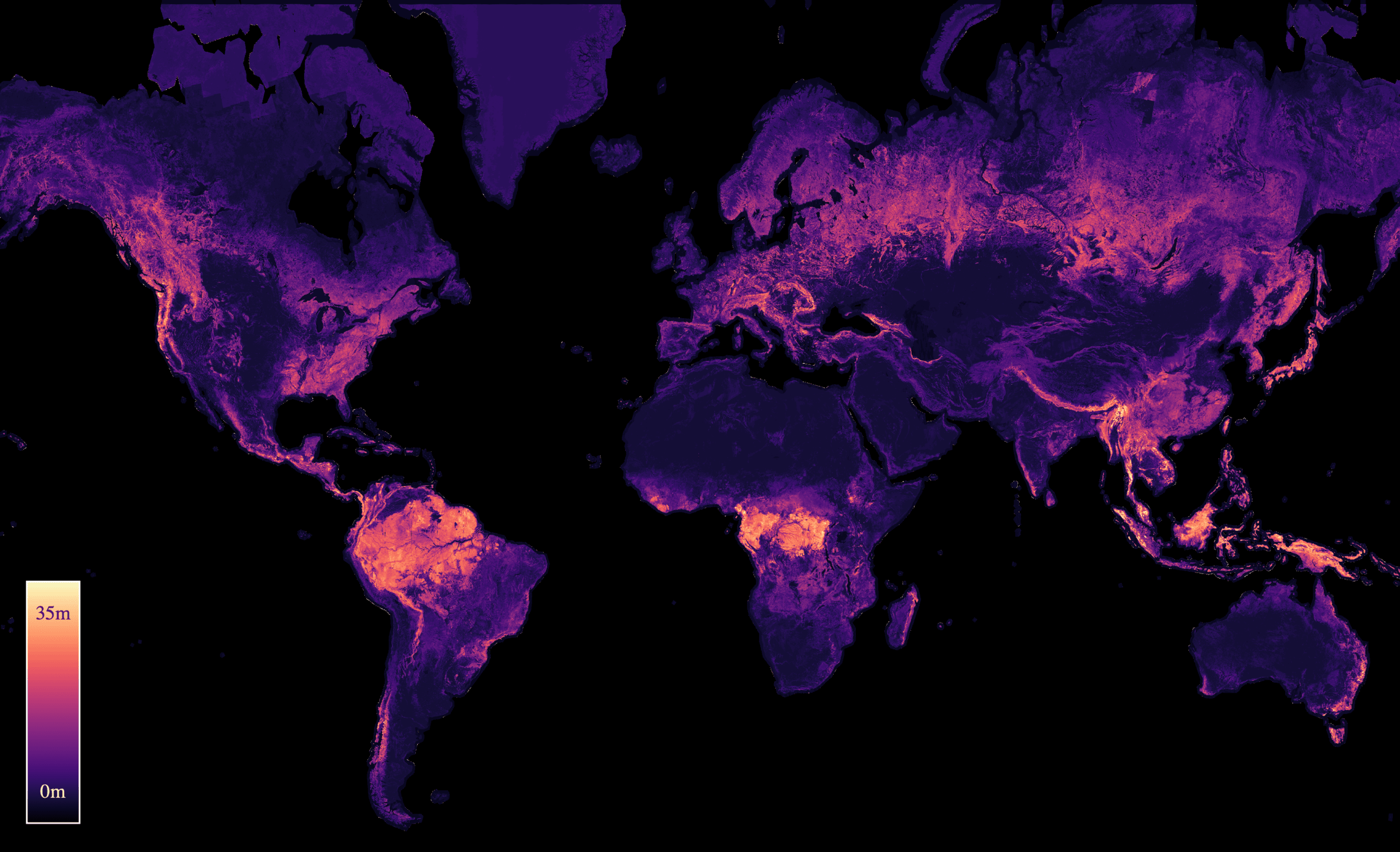

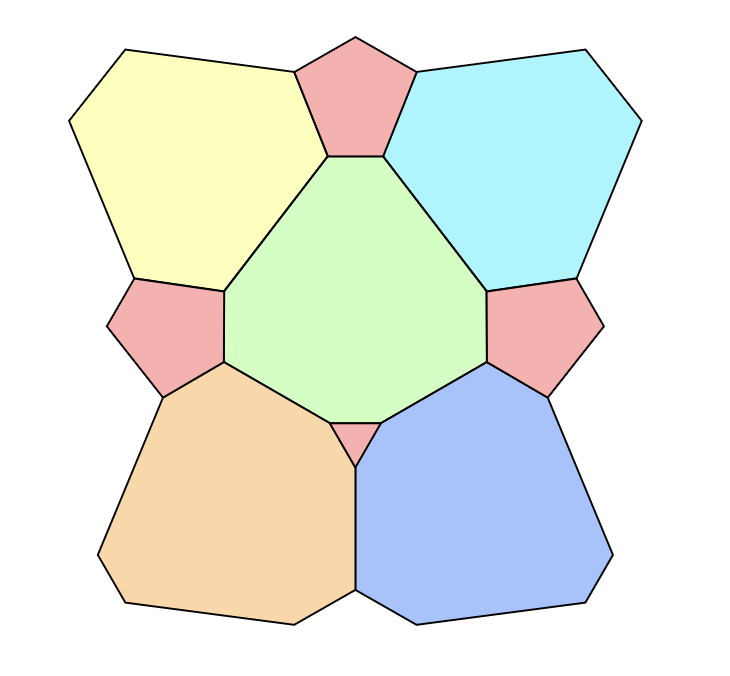

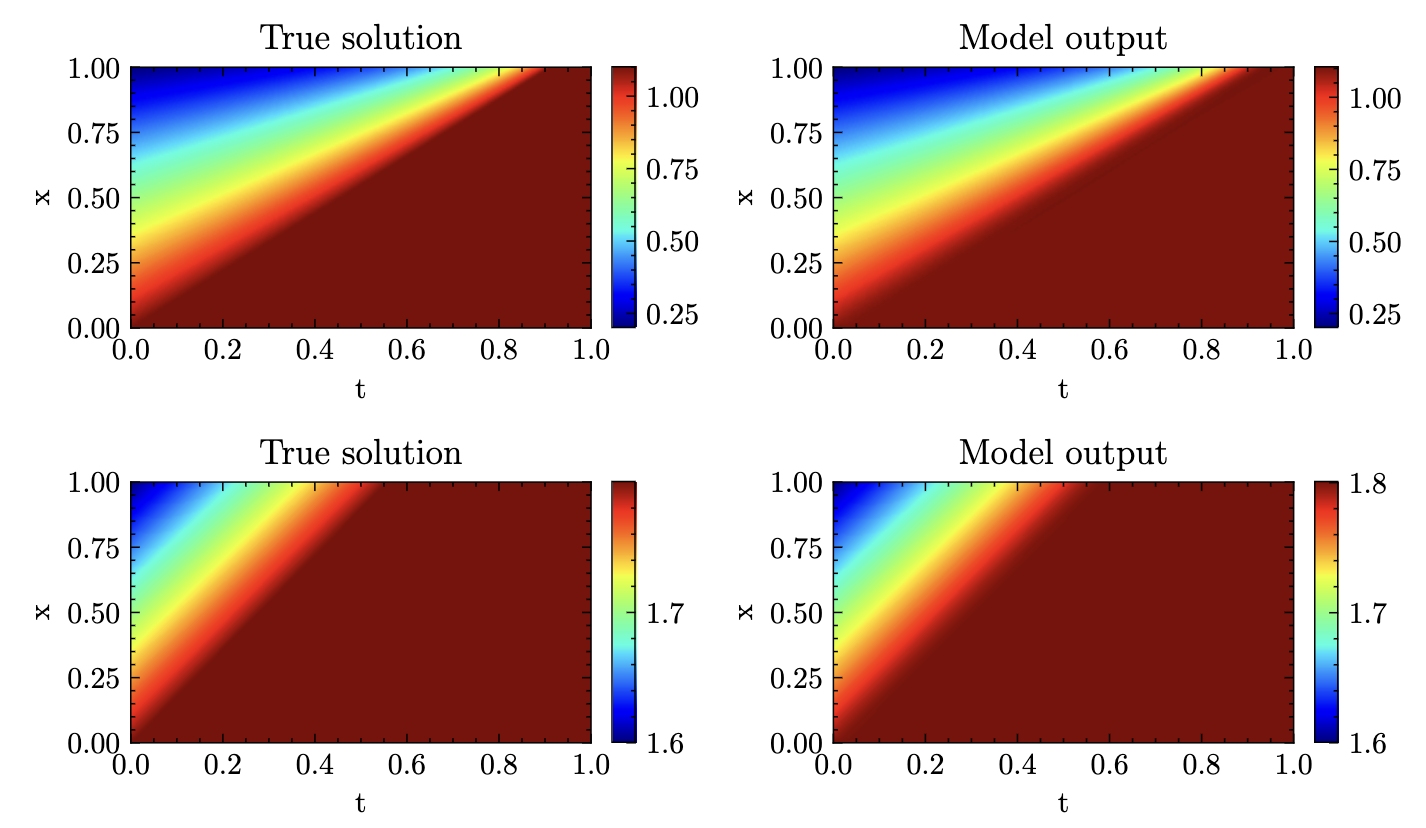

My research focuses on the efficiency of neural networks, from weight sparsity and quantization to architectural efficiency and speculative decoding. I’m also very interested in using deep learning for scientific discovery (AI4Science/AI4Math) and for addressing sustainability challenges such as forest monitoring. Further topics include optimization and agentic AI. At ZIB, I lead the iol.LEARN Deep Learning group. Please take a look at my list of publications, and feel free to reach out with questions or ideas for potential collaborations! You can find TL;DRs of some of my papers on my blog, and a collection of interesting links and AI news here.

Previously

I studied Mathematics at TU Berlin (BSc 2017, MSc 2021), with a semester abroad at the Università di Bologna. During my studies, I worked on combinatorial optimization with Leon Sering at the COGA Group and interned in the research groups of Prof. Sergio de Rosa at Università degli Studi di Napoli Federico II and Prof. Marco Mondelli at IST Austria. I joined the IOL Lab in 2020 as a student researcher, became a doctoral researcher in 2021, and have led the Deep Learning research group since 2024. I am also a member of the Berlin Mathematical School and the MATH+ Cluster of Excellence. You can find my full CV here.


Latest publications

- Preprint: arXiv preprint arXiv:2602.21421, 2026
- Preprint: arXiv preprint arXiv:2602.00628, 2026
- Preprint: arXiv preprint arXiv:2602.04378, 2026
- ICLR26: The Fourteenth International Conference on Learning Representations, 2026
- ICLR26: The Fourteenth International Conference on Learning Representations, 2026
- Preprint: arXiv preprint arXiv:2512.10922, 2025
- Preprint: arXiv preprint arXiv:2512.10507, 2025
- Preprint: arXiv preprint arXiv:2510.14444, 2025
- Preprint: arXiv preprint arXiv:2510.13713, 2025
- ICML25: Forty-second International Conference on Machine Learning, 2025
- Journal: Mathematical Optimization for Machine Learning, 2025
- Workshop: ICLR25 Workshop on Sparsity in LLMs (SLLM), 2025
- ICML24: Forty-first International Conference on Machine Learning, 2024
- Workshop: ICLR24 Workshop on AI4DifferentialEquations In Science, 2024
- Preprint: arXiv preprint arXiv:2312.15230, 2023